Earlier this year, the Spring Team released the beta version of Spring Native, giving Spring developers the chance to try out GraalVM and see what all the fuss was about. Quarkus and Micronaut both support GraalVM and cloud native development, so it was about time for Spring to join in.

Over the next three posts, we will explore Spring Cloud Function, Spring Native, and AWS Lambda with DynamoDB. I will not go in depth on each of these technologies, as they are better documented elsewhere.

Update: With the release of Spring Native 0.11.X, FunctionalSpringApplication is no longer supported. I have updated the code and GitHub repo to reflect these changes.

Table of Contents:

- Part 1 - The Code

- Part 2 - The Build

- Part 3 - The Deploy

Why Cloud Function and Native?

If you have worked with Java and Spring Boot before, you know that the JVM can use quite a lot of resources, even for simple applications. Spring Boot also has a slow startup time, because much of its configuration happens at runtime. This isn't much of a problem if you are working with a monolith, or with microservices that do not need to scale quickly and are not resource-constrained. When moving to the cloud, however, these things matter.

Enter GraalVM and Spring Native. With GraalVM, Java applications can be compiled ahead of time into standalone executables (native images) that don't require a JVM at runtime. This gives our applications near-instant startup times and much lower resource usage. The drawback, however, is that compilation takes much longer and requires 6GB+ of RAM. The result, though, is well worth the extra effort.

The table below shows startup times and memory usage for the non-native and native versions of the code in this series.

| Version | Configured Memory | Cold Start | Warm Start | Memory Used |
|---|---|---|---|---|
| Non-Native | 512 MB | 4258.94 ms | 40 - 80 ms | 204 MB |
| Native | 512 MB | 179.03 ms | 5 - 10 ms | 116 MB |
| Native | 128 MB | 722.57 ms | 5 - 10 ms | 116 MB |
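For a sense of scale, the ratios for the two 512 MB rows follow directly from the measurements in the table:

$$
\frac{4258.94\ \text{ms}}{179.03\ \text{ms}} \approx 23.8
\qquad\qquad
1 - \frac{116\ \text{MB}}{204\ \text{MB}} \approx 0.43
$$

So at the same memory setting, the native build cold-starts roughly 24 times faster and uses about 43% less memory.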

The Scenario

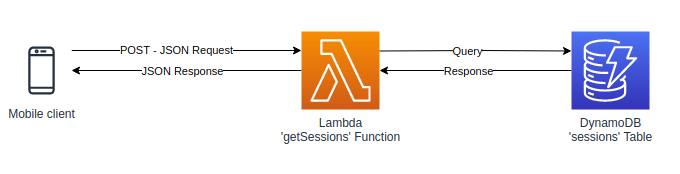

You are creating a mobile game and would like to show players the results of their past game sessions. Each session has a list of players, their scores, and a timestamp of when the session took place. Since you do not need a long-running process, you decide that a serverless function makes the most sense. AWS Lambda is a great candidate for this, and we can use DynamoDB as the data store.

We will use Spring Cloud Function to create our Function-as-a-Service, then compile it with Spring Native. Once we have a deployment package, we will create a DynamoDB table and a Lambda function to deploy it to.

Requirements

Before we begin, make sure you have the following:

- Java 11

- Docker

- 6GB+ RAM, for the native-image build tools

- AWS account and CLI

Project Setup

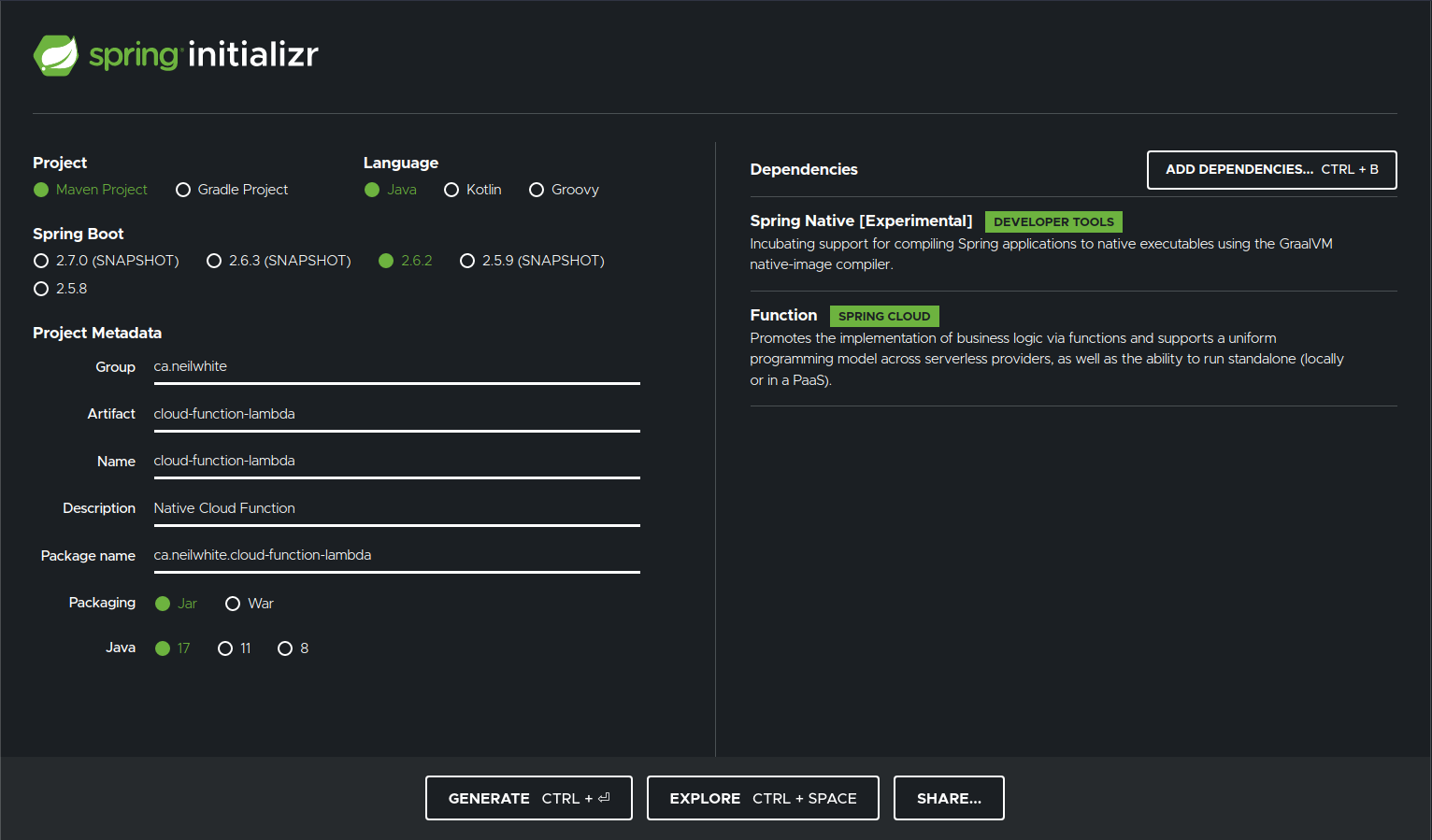

First, head over to start.spring.io to create a new project.

With our new project created, open pom.xml and add a few new dependencies:

```xml
<dependency>
    <groupId>org.springframework</groupId>
    <artifactId>spring-web</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-function-adapter-aws</artifactId>
    <version>3.2.1</version>
</dependency>
<dependency>
    <groupId>software.amazon.awssdk</groupId>
    <artifactId>dynamodb</artifactId>
    <version>2.17.102</version>
</dependency>
<dependency>
    <groupId>software.amazon.awssdk</groupId>
    <artifactId>protocol-core</artifactId>
    <version>2.17.102</version>
</dependency>
```

spring-web and spring-cloud-function-adapter-aws handle the AWS platform specifics for us when deploying and running our function, while dynamodb lets us work with AWS DynamoDB.

We will also replace the native profile that Spring Initializr auto-generates for us:

```xml
<profile>
    <id>native</id>
    <build>
        <finalName>cloud-function-dynamodb-lambda</finalName>
        <plugins>
            <!-- Builds the native image -->
            <plugin>
                <groupId>org.graalvm.buildtools</groupId>
                <artifactId>native-maven-plugin</artifactId>
                <version>0.9.9</version>
                <executions>
                    <execution>
                        <id>test-native</id>
                        <goals>
                            <goal>test</goal>
                        </goals>
                        <phase>test</phase>
                    </execution>
                    <execution>
                        <id>build-native</id>
                        <goals>
                            <goal>build</goal>
                        </goals>
                        <phase>package</phase>
                    </execution>
                </executions>
                <configuration>
                    <buildArgs>--enable-url-protocols=http</buildArgs>
                </configuration>
            </plugin>
            <!-- Creates the .zip with bootstrap and native image -->
            <plugin>
                <artifactId>maven-assembly-plugin</artifactId>
                <executions>
                    <execution>
                        <id>native</id>
                        <phase>package</phase>
                        <goals>
                            <goal>single</goal>
                        </goals>
                        <inherited>false</inherited>
                    </execution>
                </executions>
                <configuration>
                    <descriptors>
                        <descriptor>src/assembly/native.xml</descriptor>
                    </descriptors>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
                <configuration>
                    <classifier>exec</classifier>
                </configuration>
            </plugin>
        </plugins>
    </build>
</profile>
```

The first change to the profile is the addition of buildArgs for native-image. Without the --enable-url-protocols=http flag, the native image is built without support for http URLs, and our function won't be able to receive events from AWS Lambda. The second change is the maven-assembly-plugin, which bundles the native executable and a bootstrap file into a .zip for deploying to AWS.
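spring-cloud-function-adapter-aws handles the Lambda Runtime API for us, but a rough sketch shows why the flag matters: with a custom runtime, the executable itself repeatedly asks the Runtime API for the next event over plain HTTP and posts each result back. The class below is a hypothetical, simplified loop built on java.net.HttpURLConnection. It is not the adapter's implementation, it skips error reporting, and RuntimeLoopSketch and its methods are illustrative names. The endpoint paths, the Lambda-Runtime-Aws-Request-Id header, and the AWS_LAMBDA_RUNTIME_API environment variable are part of the Lambda Runtime API.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.function.UnaryOperator;

// Hypothetical sketch of a custom runtime event loop, not spring-cloud-function-adapter-aws's code
public class RuntimeLoopSketch {

    private static final String API_VERSION = "2018-06-01";

    // Long-polled with GET; Lambda answers when the next event is available
    static String nextInvocationUrl(String runtimeApi) {
        return "http://" + runtimeApi + "/" + API_VERSION + "/runtime/invocation/next";
    }

    // The function's result for a given request id is POSTed here
    static String responseUrl(String runtimeApi, String requestId) {
        return "http://" + runtimeApi + "/" + API_VERSION
                + "/runtime/invocation/" + requestId + "/response";
    }

    static void run(String runtimeApi, UnaryOperator<String> handler) throws IOException {
        while (true) {
            // new URL("http://...") needs the "http" protocol handler, which a
            // native image only includes when built with --enable-url-protocols=http
            HttpURLConnection next =
                    (HttpURLConnection) new URL(nextInvocationUrl(runtimeApi)).openConnection();
            String requestId = next.getHeaderField("Lambda-Runtime-Aws-Request-Id");
            String event;
            try (InputStream in = next.getInputStream()) {
                event = new String(in.readAllBytes(), StandardCharsets.UTF_8);
            }

            // Send the handler's output back for this request id
            HttpURLConnection reply =
                    (HttpURLConnection) new URL(responseUrl(runtimeApi, requestId)).openConnection();
            reply.setRequestMethod("POST");
            reply.setDoOutput(true);
            try (OutputStream out = reply.getOutputStream()) {
                out.write(handler.apply(event).getBytes(StandardCharsets.UTF_8));
            }
            reply.getResponseCode(); // completes the request
        }
    }

    public static void main(String[] args) throws IOException {
        // Lambda sets AWS_LAMBDA_RUNTIME_API to the host:port of the Runtime API
        String runtimeApi = System.getenv("AWS_LAMBDA_RUNTIME_API");
        if (runtimeApi == null) {
            System.err.println("AWS_LAMBDA_RUNTIME_API is not set; not running inside Lambda");
            return;
        }
        run(runtimeApi, event -> event); // echo handler, for illustration only
    }
}
```

In the real deployment, the bootstrap script starts the native executable and the adapter runs a loop like this one, routing each event to our function bean.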

The final thing to do before we look at the code is to create two directories under src: assembly and shell. Under assembly, add an XML file named native.xml, which tells maven-assembly-plugin how to create our deployment package.

```xml
<assembly xmlns="http://maven.apache.org/plugins/maven-assembly-plugin/assembly/1.1.2"
          xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
          xsi:schemaLocation="http://maven.apache.org/plugins/maven-assembly-plugin/assembly/1.1.2 https://maven.apache.org/xsd/assembly-1.1.2.xsd">
    <id>native</id>
    <formats>
        <format>zip</format>
    </formats>
    <baseDirectory></baseDirectory>
    <fileSets>
        <fileSet>
            <directory>src/shell</directory>
            <outputDirectory>/</outputDirectory>
            <useDefaultExcludes>true</useDefaultExcludes>
            <fileMode>0775</fileMode>
            <includes>
                <include>bootstrap</include>
            </includes>
        </fileSet>
        <fileSet>
            <directory>target</directory>
            <outputDirectory>/</outputDirectory>
            <useDefaultExcludes>true</useDefaultExcludes>
            <fileMode>0775</fileMode>
            <includes>
                <!-- Change me to match artifactId -->
                <include>cloud-function-dynamodb-lambda</include>
            </includes>
        </fileSet>
    </fileSets>
</assembly>
```

And finally, under shell, add a file called bootstrap. This file tells AWS Lambda how to run our function.

```sh
#!/bin/sh
cd ${LAMBDA_TASK_ROOT:-.}
./cloud-function-dynamodb-lambda
```

Time to Code

Now that we have all the project setup out of the way, we can move on to the actual code. In this section we will create our model classes and the function itself.

The project structure should look like this at the end:

```text
src
├── assembly
│   └── native.xml
├── main
│   └── java
│       └── ca.neilwhite.cloudfunctiondynamodblambda
│           ├── CloudFunctionDynamodbLambdaApplication.java
│           ├── GetSessions.java
│           └── models
│               ├── Request.java
│               ├── Response.java
│               └── Session.java
└── shell
    └── bootstrap
```

The Models

We will start with the Session class, which is modelled after our DynamoDB table:

| Attribute | Key | DynamoDB Type |
|---|---|---|
| userId | Partition key | String |
| sessionId | Sort key | String |
| timestamp | | Number |
| participants | | String Set |
| results | | Map of Numbers |

The class has no userId property, since userId is only used for the DynamoDB query.

The Session class also has a static method to convert the values from DynamoDB into our object.

```java
import java.io.Serializable;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import software.amazon.awssdk.services.dynamodb.model.AttributeValue;

public class Session implements Serializable {

    private String sessionId;
    private Long timestamp;
    private List<String> participants;
    private Map<String, Integer> results;

    // Converts a DynamoDB item into a Session; DynamoDB returns numbers as strings
    public static Session from(Map<String, AttributeValue> values) {
        Session session = new Session();
        session.setSessionId(values.get("sessionId").s());
        session.setTimestamp(Long.parseLong(values.get("timestamp").n()));
        session.setParticipants(values.get("participants").ss());

        Map<String, AttributeValue> resultValues = values.get("results").m();
        Map<String, Integer> results = new HashMap<>();
        resultValues.forEach((k, v) -> results.put(k, Integer.parseInt(v.n())));
        session.setResults(results);

        return session;
    }

    // constructors, getters & setters
}
```

With the Session class done, we can look at the Request and Response classes. These simple classes represent the JSON objects we expect when interacting with the API.

Request:

```json
{
  "userId": "player1"
}
```

Response:

```json
{
  "sessions": [
    {
      "sessionId": "abc123",
      "timestamp": 1632936430,
      "participants": [
        "player1",
        "player2",
        "player3"
      ],
      "results": {
        "player1": 1,
        "player2": 3,
        "player3": 5
      }
    }
  ]
}
```

The Request class:

```java
import java.io.Serializable;

public class Request implements Serializable {
    private String userId;
    // constructors, getters & setters
}
```

The Response class:

```java
import java.io.Serializable;
import java.util.List;

public class Response implements Serializable {
    private List<Session> sessions;
    // constructors, getters & setters
}
```

The Application Class

With the Spring Native 0.11.X update, FunctionalSpringApplication, which earlier versions of Spring Native required, is no longer supported. This means we can now write our Application class like any other Spring Boot application.

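```java
import ca.neilwhite.cloudfunctiondynamodblambda.models.Request;
import ca.neilwhite.cloudfunctiondynamodblambda.models.Response;
import ca.neilwhite.cloudfunctiondynamodblambda.models.Session;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.context.annotation.Bean;
import org.springframework.nativex.hint.NativeHint;
import org.springframework.nativex.hint.SerializationHint;
import software.amazon.awssdk.regions.Region;
import software.amazon.awssdk.services.dynamodb.DynamoDbClient;

@NativeHint
@SerializationHint(types = {Request.class, Response.class, Session.class})
@SpringBootApplication
public class CloudFunctionDynamodbLambdaApplication {

    private final Region awsRegion = Region.US_EAST_1;

    public static void main(String[] args) {
        SpringApplication.run(CloudFunctionDynamodbLambdaApplication.class, args);
    }

    @Bean
    public DynamoDbClient dynamoDbClient() {
        return DynamoDbClient.builder().region(awsRegion).build();
    }

    // Injected into GetSessions by type
    @Bean
    public String tableName() {
        return "sessions";
    }
}
```

The Function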

A note on the AWS Java SDK

The AWS SDK v2 comes with an enhanced DynamoDB client that makes working with tables much easier: you can create a bean for the table itself and act on it directly instead of going through the DynamoDbClient. However, the enhanced client does not work with native compilation. See this GitHub issue for details. Hopefully this will be fixed in the future; if it is, I will update this post.

Now we can look at the logic of our function:

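```java
import ca.neilwhite.cloudfunctiondynamodblambda.models.Request;
import ca.neilwhite.cloudfunctiondynamodblambda.models.Response;
import ca.neilwhite.cloudfunctiondynamodblambda.models.Session;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;
import org.springframework.stereotype.Component;
import software.amazon.awssdk.services.dynamodb.DynamoDbClient;
import software.amazon.awssdk.services.dynamodb.model.AttributeValue;
import software.amazon.awssdk.services.dynamodb.model.QueryRequest;

@Component
public class GetSessions implements Function<Request, Response> {

    private final String tableName;
    private final DynamoDbClient dynamoDbClient;

    public GetSessions(String tableName, DynamoDbClient dynamoDbClient) {
        this.tableName = tableName;
        this.dynamoDbClient = dynamoDbClient;
    }

    @Override
    public Response apply(Request request) {
        String userId = request.getUserId().toLowerCase();

        // Bind the :userId placeholder used in the key condition expression
        Map<String, AttributeValue> expressionValues =
                Map.of(":userId", AttributeValue.builder().s(userId).build());

        QueryRequest queryRequest = QueryRequest.builder()
                .tableName(tableName)
                .keyConditionExpression("userId = :userId")
                .expressionAttributeValues(expressionValues)
                .build();

        // Convert each returned item into a Session; no matches yields an empty list
        List<Session> sessions = dynamoDbClient.query(queryRequest).items().stream()
                .map(Session::from)
                .collect(Collectors.toList());

        return new Response(sessions);
    }
}
```

Our class implements Function<Request, Response>, which lets Spring Cloud Function wire it up for us, converting the incoming JSON into a Request and our Response back into JSON.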

The main logic lives in the apply method, which is called whenever a request comes in. It builds a query asking DynamoDB for the sessions the given userId has been involved in, using userId as the partition key, and returns them wrapped in a Response. If the user has no sessions, the list is simply empty.
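To make the key condition concrete, here is a hypothetical in-memory model of the sessions table. SessionsTableModel and its methods are illustrative names, not part of the project or the AWS SDK, and the model ignores everything else DynamoDB does (pagination, capacity, filters). Items are grouped by partition key and ordered by sort key within each partition, so an equality condition on userId returns every session stored under that user.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory stand-in for the "sessions" table, for illustration only
public class SessionsTableModel {

    // partition key (userId) -> sort key (sessionId) -> item attributes
    private final Map<String, TreeMap<String, Map<String, Object>>> partitions = new TreeMap<>();

    void put(String userId, String sessionId, Map<String, Object> attributes) {
        partitions.computeIfAbsent(userId, key -> new TreeMap<>()).put(sessionId, attributes);
    }

    // Mirrors the key condition "userId = :userId": all items in one partition,
    // in ascending sort-key order; an unknown userId yields an empty list
    List<Map<String, Object>> query(String userId) {
        TreeMap<String, Map<String, Object>> partition = partitions.get(userId);
        if (partition == null) {
            return List.of();
        }
        return new ArrayList<>(partition.values());
    }

    public static void main(String[] args) {
        SessionsTableModel table = new SessionsTableModel();
        table.put("player1", "def456", Map.of("sessionId", "def456"));
        table.put("player1", "abc123", Map.of("sessionId", "abc123"));
        table.put("player2", "xyz789", Map.of("sessionId", "xyz789"));

        for (Map<String, Object> item : table.query("player1")) {
            System.out.println(item.get("sessionId")); // prints abc123, then def456
        }
        System.out.println(table.query("player3").isEmpty()); // prints true
    }
}
```

Because the key condition is an exact match and GetSessions lowercases userId before querying, items need to be stored under lowercase user ids to be found.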

Recap of Part 1

In this post, I have shown how to set up a project to create a Function-as-a-Service for AWS using Spring Cloud Function and Spring Native.

Next we will look at compiling our code to a native image.

Full source code can be found on my GitHub

Click here for Part 2 - The Build